一、项目背景

随着本人学习人工智能的深入,我发现家电行业渐渐地普及了人工智能,最常见的的语音识别。如语音控制电视、窗帘等,结合我所学习的讯飞语音api服务,可以实现语音的识别。为了巩固学习的内容,结合近期行空板M10扩展组合应用创作赛的活动,我计划做一个既可以语音生成图片又可以生成视频的瑞士军刀级别的工具。

使用讯飞提供的图像生成API可以通过图片的描述生成图片,也可以通过图片修改描述将上传的图像修改。同时可以使用硅基流动提供的视频生成API,结合生成视频的描述,轻松的生成视频。有了行空板M10扩展板的加持,我们可以不用连接电脑,即可实现无线(无限)可能。

二、项目成果(生成结果)

接着我们从零开始,一起来制作吧。

三、软硬件环境

硬件:

1.行空板

主控板,负责运行程序和处理数据。集成麦克风用于语音输入,集成显示屏用于显示提示信息、图片和视频内容。

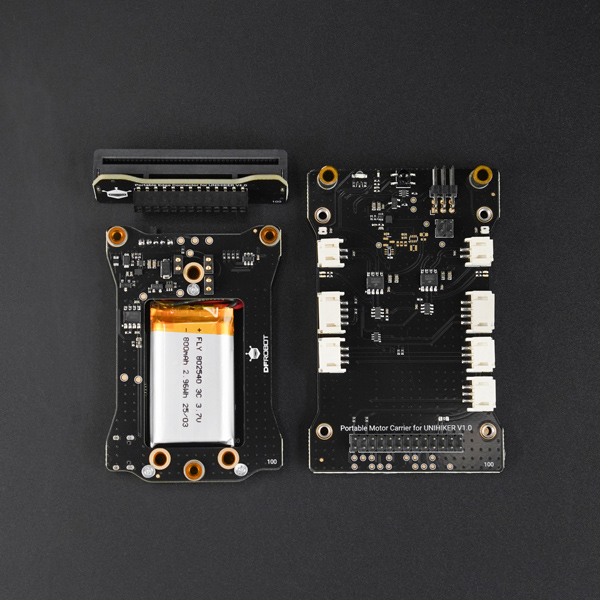

2.行空板M10扩展板组合(含电机IO扩展板、金手指扩展板、800mAh电池扩展板)

包括电机IO扩展板、金手指扩展板和800mAh电池扩展板。在这个项目中,主要利用800mAh电池扩展板为行空板提供稳定的移动电源,确保项目可以在没有外部电源的情况下运行。

3.组装行空板M10扩展版组合

最终可以实现无限连接了。

软件及环境:

1.mind+

适配uno、micro:bit、行空板的一款国产图形化编程软件,功能强大,支持python语言编辑,并上传到行空板。

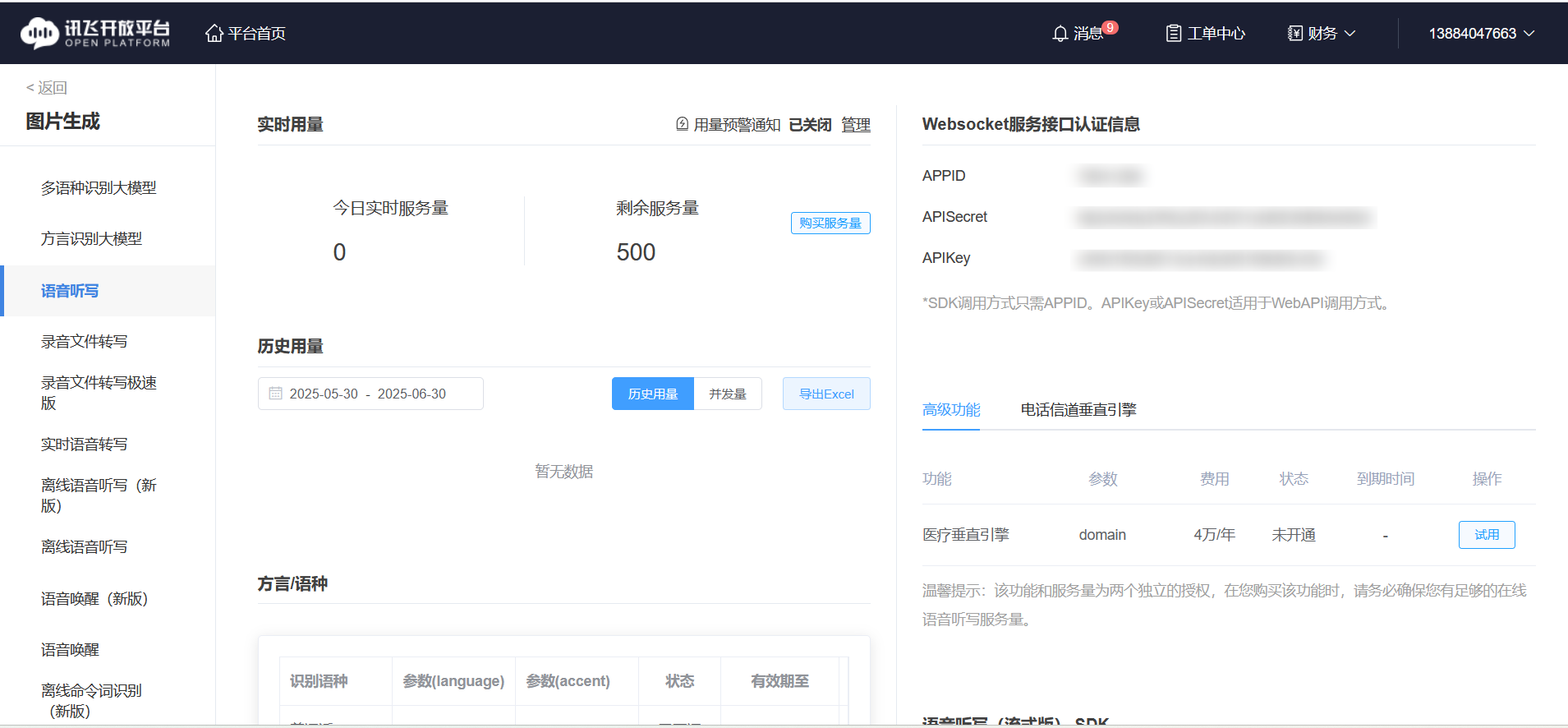

2.讯飞语音识别

可以即时将语音文件识别为文字,方便后来的程序调用。

3.讯飞图片生成HIDream

可以将文字描述生成对应的图片。

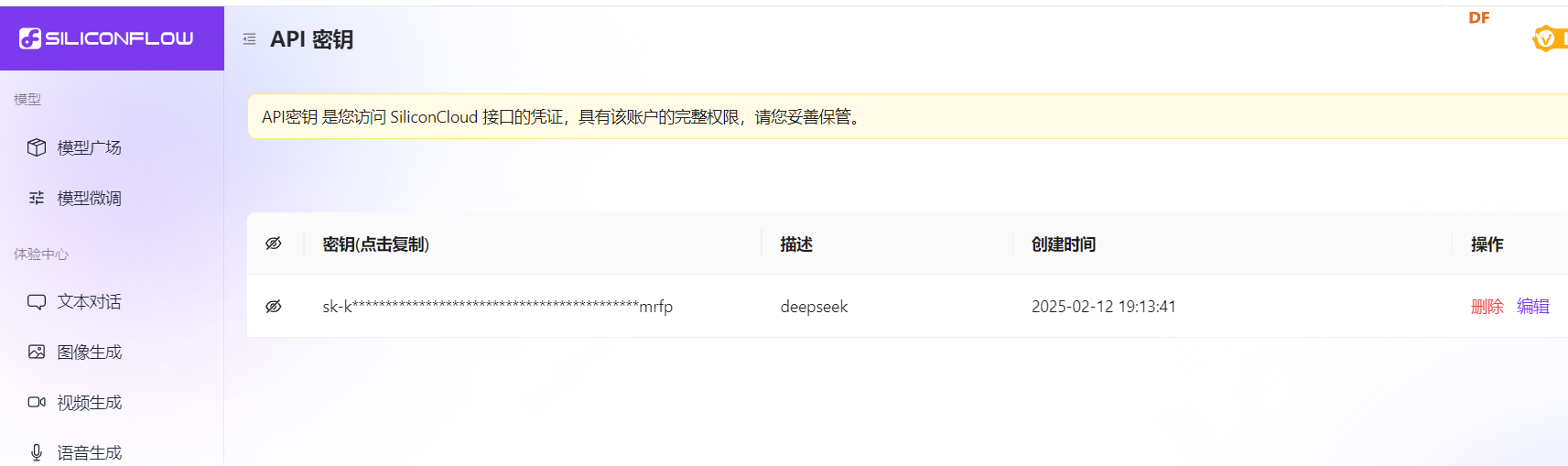

4.硅基流动API

可以根据描述场景的文本生成视频

四、程序设计思路

1.首先根据讯飞HIDream的使用方法,通过文字语言描述生成相应的图片。

示例程序如下:

# -*- encoding:utf-8 -*-

import base64

import hashlib

import hmac

import json

import time

import numpy as np

from datetime import datetime

from time import mktime

from urllib.parse import urlencode, urlparse

from wsgiref.handlers import format_date_time

from urllib import parse

from unihiker import GUI

import requests

import cv2

appid = "79541299" # 填写控制台中获取的 APPID 信息

apiSecret = "NjQzNzMyZWEyZDU0ZGYyM2E2MDMxNDdl" # 填写控制台中获取的 APISecret 信息

apiKey = "c94fd1f63d9513ce4dbd09169b85c43d" # 填写控制台中获取的 APIKey 信息

# 请求地址

create_host_url = "https://cn-huadong-1.xf-yun.com/v1/private/s3fd61810/create"

query_host_url = "https://cn-huadong-1.xf-yun.com/v1/private/s3fd61810/query"

def build_auth_request_url(request_url):

url_result = parse.urlparse(request_url)

date = "Thu, 09 May 2024 02:09:13 GMT" # format_date_time(mktime(datetime.now().timetuple()))

print(date)

method = "POST"

signature_origin = "host: {}\ndate: {}\n{} {} HTTP/1.1".format(url_result.hostname, date, method, url_result.path)

signature_sha = hmac.new(apiSecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = "api_key=\"%s\", algorithm=\"%s\", headers=\"%s\", signature=\"%s\"" % (

apiKey, "hmac-sha256", "host date request-line", signature_sha)

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

values = {

"host": url_result.hostname,

"date": date,

"authorization": authorization

}

return request_url + "?" + urlencode(values)

def create_url(url):

host = urlparse(url).netloc

path = urlparse(url).path

# 生成RFC1123格式的时间戳

now = datetime.now()

date = format_date_time(mktime(now.timetuple()))

# 拼接字符串

signature_origin = "host: " + host + "\n"

signature_origin += "date: " + date + "\n"

signature_origin += "POST " + path + " HTTP/1.1"

# 进行hmac-sha256进行加密

signature_sha = hmac.new(apiSecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha_base64 = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = f'api_key="{apiKey}", algorithm="hmac-sha256", headers="host date request-line", signature="{signature_sha_base64}"'

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

# 将请求的鉴权参数组合为字典

v = {

"authorization": authorization,

"date": date,

"host": host

}

# 拼接鉴权参数,生成url

reUrl = url + '?' + urlencode(v)

# print(reUrl)

# 此处打印出建立连接时候的url,参考本demo的时候可取消上方打印的注释,比对相同参数时生成的url与自己代码生成的url是否一致

return reUrl

def get_headers(url):

headers = {

'content-type': "application/json",

'host': urlparse(url).netloc,

'app_id': appid

}

return headers

def gen_create_request_data(text):

data = {

"header": {

"app_id": appid,

"status": 3,

"channel": "default",

"callback_url": "default",

},

"parameter": {

"oig": {

"result": {

"encoding": "utf8",

"compress": "raw",

"format": "json"

},

}

},

"payload": {

"oig": {

"text": text

},

},

}

return data

def create_task(prompt):

text = {

"image": [], #引擎上传的原图,如果仅用图片生成能力,该字段需为空

"prompt": prompt, # 该prompt 可以是要求引擎生成的描述,也可以结合上传的图片要求模型修改原图

"aspect_ratio": "1:1",

"negative_prompt": "",

"img_count": 4,

"resolution": "2k"

}

b_text = base64.b64encode(json.dumps(text).encode("utf-8")).decode()

request_url = create_url(create_host_url)

data = gen_create_request_data(b_text)

headers = get_headers(create_host_url)

response = requests.post(request_url, data=json.dumps(data), headers=headers)

# print(json.dumps(data))

# return

print('onMessage:\n' + response.text)

resp = json.loads(response.text)

taskid = resp['header']['task_id']

# print(taskid)

return taskid

def query_task(taskID):

data = {

"header": {

"app_id": appid,

"task_id": taskID # 填写创建任务时返回的task_id

}

}

request_url = create_url(query_host_url)

headers = get_headers(query_host_url)

response = requests.post(request_url, data=json.dumps(data), headers=headers)

res = json.loads(response.content)

return res

# 事件回调函数,当按钮B被点击时,清除GUI上的内容。

def on_buttonb_click_callback():

if(bs==2):

bs=0

cv2.destroyAllWindows()

u_gui.clear()

# 当按钮A被点击时,截取屏幕的一部分并保存为图片,然后清除GUI上的内容。

def on_buttona_click_callback():

global answer,text,bs,image,task_id

answer = "孙悟空大闹天宫"

task_id = create_task(answer)

bs=1

u_gui=GUI()

u_gui.on_a_click(on_buttona_click_callback)

u_gui.on_b_click(on_buttonb_click_callback)

if __name__ == '__main__':

# 创建任务

bs=0

while True:

#增加等待,防止程序退出和卡住

time.sleep(0.5)

if(bs==1):

# 创建窗口

cv2.namedWindow('Resized Image', cv2.WINDOW_NORMAL)

# 设置窗口属性为全屏

cv2.setWindowProperty('Resized Image', cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

while(True):

print(datetime.now())

res = query_task(task_id)

code = res["header"]["code"]

task_status = ''

if code == 0:

task_status = res["header"]["task_status"]

if ('' == task_status):

print("查询任务状态有误,请检查")

elif('3' == task_status):

print(datetime.now())

print("任务完成")

print(res)

f_text = res["payload"]["result"]["text"]

# 解码Base64字符串

decoded_text = base64.b64decode(f_text).decode('utf-8')

# 将JSON字符串转换为Python对象

data = json.loads(decoded_text)

# 检查data是否是列表,如果是,则遍历列表

if isinstance(data, list):

for item in data:

# 提取image_wm字段

image_wm = item.get('image_wm', '')

if image_wm: # 如果image_wm字段存在

print(image_wm)

response = requests.get(image_wm)

# 确保请求成功

if response.status_code == 200:

# 将图片数据转换为numpy数组

image_array = np.asarray(bytearray(response.content), dtype="uint8")

# 使用cv2.imdecode读取图片数据

image = cv2.imdecode(image_array, cv2.IMREAD_COLOR)

# 调整图片大小到240x320

image_resized = cv2.resize(image, (240, 320), interpolation=cv2.INTER_AREA)

bs=2

break

else:

print("查询任务中:......" + json.dumps(res))

continue

else:

print(res)

if(bs==2):

cv2.imshow("Resized Image",image_resized)

cv2.waitKey(20)

使用孙悟空大闹天宫来描述,可以得到以下图片:

好像有一点点国风的味道,但跟孙悟空有点差别,我描述不好的原因吧

2.根据大佬云天的一句话视频,参照大佬的程序并做了很多的修改,适应我的程序,大佬的原程序代码如下:

# -*- coding: UTF-8 -*-

# MindPlus

# Python

import json

import time,sys

sys.path.append("/root/mindplus/.lib/thirdExtension/liliang-xunfeiyuyin-thirdex")

import xunfeiasr

from unihiker import GUI

from unihiker import Audio

import requests

import cv2

# 定义API URL和请求头S

url_submit = "https://api.siliconflow.cn/v1/video/submit"

url_status = "https://api.siliconflow.cn/v1/video/status"

headers = {

"Authorization": "Bearer sk-kxwsrzianqfxsebnihblrgyyytrrtgvvdjvdiujcuvwymrfp",

"Content-Type": "application/json"

}

# 初始化变量

requestStatus = ""

bs = 0

payload = {}

u_gui = GUI()

u_audio = Audio()

# 事件回调函数

def on_buttona_click_callback():

global u_audio,bs2

显示.config(text="开始录音")

显示.config(x=50)

显示.config(font_size=30)

print("开始录音")

time.sleep(0.1)

u_audio.start_record("record.wav")

def on_buttonb_click_callback():

global u_audio, url_submit, url_status, headers, requestStatus, bs, payload,xunfeiasr

u_audio.stop_record()

显示.config(text="开始识别")

显示.config(x=50)

显示.config(font_size=30)

print("停止录音,开始识别")

text = xunfeiasr.xunfeiasr(r"record.wav")

time.sleep(3)

显示.config(x=0)

显示.config(font_size=20)

print(f"识别结果:{text}")

wrap_text(text)

# 提交视频生成请求

payload = {

"model": "Wan-AI/Wan2.1-T2V-14B",

"prompt": text,

"negative_prompt": "模糊",

"image_size": "960x960",

"seed": 123

}

try:

response = requests.post(url_submit, json=payload, headers=headers)

response.raise_for_status() # 检查请求是否成功

response_json = response.json()

requestId = response_json.get("requestId")

print(f"提交成功,requestId: {requestId}")

# 查询视频生成状态

payload = {"requestId": requestId}

bs = 1

except requests.exceptions.RequestException as e:

print(f"提交请求失败:{e}")

# 屏幕宽度(以像素为单位)

SCREEN_WIDTH = 240

# 字符宽度

CHAR_WIDTH = 30

def wrap_text(text):

wrapped_text = ""

current_line = ""

current_line_width = 0

for char in text:

if char == '\r':

# 如果遇到 \r,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = ""

current_line_width = 0

else:

# 检查当前行加上新字符是否超出屏幕宽度

if current_line_width + CHAR_WIDTH > SCREEN_WIDTH:

# 如果超出屏幕宽度,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = char

current_line_width = CHAR_WIDTH

else:

current_line += char

current_line_width += CHAR_WIDTH

# 添加最后一行

if current_line:

wrapped_text += current_line

wrapped_text+="\r\r视频正在生成中……"

显示.config(text=wrapped_text)

# 设置按键回调

u_gui.on_a_click(on_buttona_click_callback)

u_gui.on_b_click(on_buttonb_click_callback)

u_gui.draw_text(text="行空板",x=60,y=20,font_size=30, color="#0000FF")

显示=u_gui.draw_text(text="一句话生成视频",x=20,y=100,font_size=20, color="#FF0000")

# 设置讯飞语音识别参数

xunfeiasr.xunfeiasr_set(APPID="*****", APISecret="*****", APIKey="*****")

bs2=0

# 主循环

while True:

if bs == 1:

try:

response = requests.post(url_status, json=payload, headers=headers)

response.raise_for_status() # 检查请求是否成功

response_json = response.json()

requestStatus = response_json.get("status")

print(f"查询状态:{requestStatus}")

if requestStatus == "Succeed":

bs = 0

video_url = response_json['results']['videos'][0]['url']

print(f"视频URL:{video_url}")

# 播放视频

cap = cv2.VideoCapture(video_url)

if not cap.isOpened():

print("无法打开视频流,请检查URL是否有效")

else:

print("成功打开视频流")

# 创建窗口并设置窗口大小

cv2.namedWindow('Video', cv2.WINDOW_NORMAL)

#cv2.resizeWindow('Video', 240, 320) # 设置窗口大小为240x320

cv2.setWindowProperty("Video", cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

bs2=1

last_frame = None # 用于保存最后一帧

# 设置帧率(每秒25帧)

fps = 25

frame_delay = int(1000 / fps) # 每帧的显示时间(毫秒)

# 循环读取视频帧

while True:

ret, frame = cap.read()

if ret:

last_frame = frame.copy() # 保存当前帧为最后一帧

cv2.imshow('Video', frame)

else:

print("视频流播放完成")

break

if cv2.waitKey(frame_delay) == ord('q'):

break

# 释放资源

cap.release()

cv2.destroyAllWindows()

except requests.exceptions.RequestException as e:

print(f"查询状态失败:{e}")

time.sleep(3) # 等待一段时间后重试3.结合我的想法,制作一个集生成图片和视频为一体的仿瑞士军刀工具。

代码如下:

# -*- encoding:utf-8 -*-

import base64

import hashlib

import hmac

import json

import time,sys

import numpy as np

import requests

import cv2

import xunfeiasr

import requests

import cv2

from unihiker import Audio

from datetime import datetime

from time import mktime

from urllib.parse import urlencode, urlparse

from wsgiref.handlers import format_date_time

from urllib import parse

from unihiker import GUI

appid = "****************" # 填写控制台中获取的 APPID 信息

apiSecret = "****************" # 填写控制台中获取的 APISecret 信息

apiKey = "****************" # 填写控制台中获取的 APIKey 信息

# 请求地址

create_host_url = "https://cn-huadong-1.xf-yun.com/v1/private/s3fd61810/create"

query_host_url = "https://cn-huadong-1.xf-yun.com/v1/private/s3fd61810/query"

def build_auth_request_url(request_url):

url_result = parse.urlparse(request_url)

date = "Thu, 09 May 2024 02:09:13 GMT" # format_date_time(mktime(datetime.now().timetuple()))

print(date)

method = "POST"

signature_origin = "host: {}\ndate: {}\n{} {} HTTP/1.1".format(url_result.hostname, date, method, url_result.path)

signature_sha = hmac.new(apiSecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = "api_key=\"%s\", algorithm=\"%s\", headers=\"%s\", signature=\"%s\"" % (

apiKey, "hmac-sha256", "host date request-line", signature_sha)

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

values = {

"host": url_result.hostname,

"date": date,

"authorization": authorization

}

return request_url + "?" + urlencode(values)

def create_url(url):

host = urlparse(url).netloc

path = urlparse(url).path

# 生成RFC1123格式的时间戳

now = datetime.now()

date = format_date_time(mktime(now.timetuple()))

# 拼接字符串

signature_origin = "host: " + host + "\n"

signature_origin += "date: " + date + "\n"

signature_origin += "POST " + path + " HTTP/1.1"

# 进行hmac-sha256进行加密

signature_sha = hmac.new(apiSecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha_base64 = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = f'api_key="{apiKey}", algorithm="hmac-sha256", headers="host date request-line", signature="{signature_sha_base64}"'

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

# 将请求的鉴权参数组合为字典

v = {

"authorization": authorization,

"date": date,

"host": host

}

# 拼接鉴权参数,生成url

reUrl = url + '?' + urlencode(v)

# print(reUrl)

# 此处打印出建立连接时候的url,参考本demo的时候可取消上方打印的注释,比对相同参数时生成的url与自己代码生成的url是否一致

return reUrl

def get_headers(url):

headers = {

'content-type': "application/json",

'host': urlparse(url).netloc,

'app_id': appid

}

return headers

def gen_create_request_data(text):

data = {

"header": {

"app_id": appid,

"status": 3,

"channel": "default",

"callback_url": "default",

},

"parameter": {

"oig": {

"result": {

"encoding": "utf8",

"compress": "raw",

"format": "json"

},

}

},

"payload": {

"oig": {

"text": text

},

},

}

return data

def create_task(prompt):

text = {

"image": [], #引擎上传的原图,如果仅用图片生成能力,该字段需为空

"prompt": prompt, # 该prompt 可以是要求引擎生成的描述,也可以结合上传的图片要求模型修改原图

"aspect_ratio": "1:1",

"negative_prompt": "",

"img_count": 4,

"resolution": "2k"

}

b_text = base64.b64encode(json.dumps(text).encode("utf-8")).decode()

request_url = create_url(create_host_url)

data = gen_create_request_data(b_text)

headers = get_headers(create_host_url)

response = requests.post(request_url, data=json.dumps(data), headers=headers)

# print(json.dumps(data))

# return

print('onMessage:\n' + response.text)

resp = json.loads(response.text)

taskid = resp['header']['task_id']

# print(taskid)

return taskid

def query_task(taskID):

data = {

"header": {

"app_id": appid,

"task_id": taskID # 填写创建任务时返回的task_id

}

}

request_url = create_url(query_host_url)

headers = get_headers(query_host_url)

response = requests.post(request_url, data=json.dumps(data), headers=headers)

#print("response:",response)

res = json.loads(response.content)

#print(res)

return res

def wenshengtu(answer= "孙悟空大闹天宫"):

task_id = create_task(answer)

#print(task_id)

while(True):

#print(datetime.now())

res = query_task(task_id)

time.sleep(2)

#print(res)

code = res["header"]["code"]

task_status = ''

if code == 0:

task_status = res["header"]["task_status"]

if ('' == task_status):

print("查询任务状态有误,请检查")

elif('3' == task_status):

print(datetime.now())

print("任务完成")

print(res)

f_text = res["payload"]["result"]["text"]

# 解码Base64字符串

decoded_text = base64.b64decode(f_text).decode('utf-8')

# 将JSON字符串转换为Python对象

data = json.loads(decoded_text)

# 检查data是否是列表,如果是,则遍历列表

if isinstance(data, list):

for item in data:

# 提取image_wm字段

image_wm = item.get('image_wm', '')

if image_wm: # 如果image_wm字段存在

print(image_wm)

response = requests.get(image_wm)

#print("response.status_code:",response.status_code)

# 确保请求成功

if response.status_code == 200:

# 将图片数据转换为numpy数组

image_array = np.asarray(bytearray(response.content), dtype="uint8")

# 使用cv2.imdecode读取图片数据

image = cv2.imdecode(image_array, cv2.IMREAD_COLOR)

# 调整图片大小到240x320

image_resized = cv2.resize(image, (240, 320), interpolation=cv2.INTER_AREA)

#print("image_resized:",image_resized)

return image_resized

#break

else:

print("查询任务中:......" + json.dumps(res))

continue

else:

print(res)

#return image_resized

def show_pic(image_resized):

# 创建窗口

cv2.namedWindow('Resized Image', cv2.WINDOW_NORMAL)

# 设置窗口属性为全屏

cv2.setWindowProperty('Resized Image', cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

cv2.imshow("Resized Image",image_resized)

cv2.waitKey(20)

def yuyinshibie():

global u_audio,xunfeiasr

显示.config(text="开始识别")

显示.config(x=50)

显示.config(font_size=30)

print("停止录音,开始识别")

text = xunfeiasr.xunfeiasr(r"record.wav")

time.sleep(3)

显示.config(x=0)

显示.config(font_size=20)

print(f"识别结果:{text}")

return text

def wrap_text(text):

wrapped_text = ""

current_line = ""

current_line_width = 0

for char in text:

if char == '\r':

# 如果遇到 \r,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = ""

current_line_width = 0

else:

# 检查当前行加上新字符是否超出屏幕宽度

if current_line_width + CHAR_WIDTH > SCREEN_WIDTH:

# 如果超出屏幕宽度,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = char

current_line_width = CHAR_WIDTH

else:

current_line += char

current_line_width += CHAR_WIDTH

# 添加最后一行

if current_line:

wrapped_text += current_line

wrapped_text+="\r\r视频正在生成中……"

显示.config(text=wrapped_text)

def wrap_text1(text):

wrapped_text = ""

current_line = ""

current_line_width = 0

for char in text:

if char == '\r':

# 如果遇到 \r,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = ""

current_line_width = 0

else:

# 检查当前行加上新字符是否超出屏幕宽度

if current_line_width + CHAR_WIDTH > SCREEN_WIDTH:

# 如果超出屏幕宽度,将当前行加入到 wrapped_text 中,并开始新的一行

wrapped_text += current_line + "\n"

current_line = char

current_line_width = CHAR_WIDTH

else:

current_line += char

current_line_width += CHAR_WIDTH

# 添加最后一行

if current_line:

wrapped_text += current_line

wrapped_text+="\r\r图片正在生成中……"

显示.config(text=wrapped_text)

def shipinshengcheng(text):

# 提交视频生成请求

payload = {

"model": "Wan-AI/Wan2.1-T2V-14B",

"prompt": text,

"negative_prompt": "模糊",

"image_size": "960x960",

"seed": 123

}

try:

response = requests.post(url_submit, json=payload, headers=headers)

time.sleep(1)

response.raise_for_status() # 检查请求是否成功

response_json = response.json()

requestId = response_json.get("requestId")

# 等待一段时间后重试

print(f"提交成功,requestId: {requestId}")

# 查询视频生成状态

payload = {"requestId": requestId}

except requests.exceptions.RequestException as e:

print(f"提交请求失败:{e}")

while True:

#获得 payload 参数后重新提交。

try:

response = requests.post(url_status, json=payload, headers=headers)

#time.sleep(2) #加入延时,等待数据

response.raise_for_status() # 检查请求是否成功

response_json = response.json()

requestStatus = response_json.get("status")

print(f"查询状态:{requestStatus}")

if requestStatus == "Succeed":

bs = 0

video_url = response_json['results']['videos'][0]['url']

print(f"视频URL:{video_url}")

# 播放视频

cap = cv2.VideoCapture(video_url)

if not cap.isOpened():

print("无法打开视频流,请检查URL是否有效")

else:

print("成功打开视频流")

# 创建窗口并设置窗口大小

cv2.namedWindow('Video', cv2.WINDOW_NORMAL)

#cv2.resizeWindow('Video', 240, 320) # 设置窗口大小为240x320

cv2.setWindowProperty("Video", cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

bs2=1

last_frame = None # 用于保存最后一帧

# 设置帧率(每秒25帧)

fps = 25

frame_delay = int(1000 / fps) # 每帧的显示时间(毫秒)

# 循环读取视频帧

while True:

ret, frame = cap.read()

if ret:

last_frame = frame.copy() # 保存当前帧为最后一帧

cv2.imshow('Video', frame)

else:

print("视频流播放完成")

break

if cv2.waitKey(frame_delay) == ord('q'):

break

# 释放资源

cap.release()

cv2.destroyAllWindows()

except requests.exceptions.RequestException as e:

print(f"查询状态失败:{e}")

time.sleep(3) # 等待一段时间后重试

# 事件回调函数

def on_buttona_click_callback():

global u_audio,bs

u_audio.stop_record()

bs=1

def on_buttonb_click_callback():

global chengxu

chengxu=0

def button_click1():

global chengxu,u_audio

显示.config(text="开始录音")

显示.config(x=50)

显示.config(font_size=30)

print("开始录音")

time.sleep(0.1)

u_audio.start_record("record.wav")

chengxu=1

button1.remove()

button2.remove()

def button_click2():

global u_audio,chengxu

显示.config(text="开始录音")

显示.config(x=50)

显示.config(font_size=30)

print("开始录音")

time.sleep(0.1)

u_audio.start_record("record.wav")

chengxu=2

sys.path.append("/root/mindplus/.lib/thirdExtension/liliang-xunfeiyuyin-thirdex")

# 定义API URL和请求头S

url_submit = "https://api.siliconflow.cn/v1/video/submit"

url_status = "https://api.siliconflow.cn/v1/video/status"

headers = {

"Authorization": "Bearer sk-kxwsrzianqfxsebnihblrgyyytrrtgvvdjvdiujcuvwymrfp",

"Content-Type": "application/json"

}

# 初始化变量

requestStatus = ""

bs = 0

chengxu=0

payload = {}

u_gui = GUI()

u_audio = Audio()

xunfeiasr.xunfeiasr_set(APPID="****************", APISecret="****************", APIKey="****************")

标题=u_gui.draw_text(text="行空板智能生成",x=10,y=20,font_size=22, color="#0000FF")

显示=u_gui.draw_text(text="点击以下按钮开始",x=0,y=100,font_size=20, color="#0000FF")

button1=u_gui.add_button(text=" 语音生成图片",x=0,y=220,w=240,h=30,onclick=button_click1)

button2=u_gui.add_button(text=" 语音生成视频",x=0,y=280,w=240,h=30,onclick=button_click2)

# 屏幕宽度(以像素为单位)

SCREEN_WIDTH = 240

# 字符宽度

CHAR_WIDTH = 30

# 设置按键回调

u_gui.on_a_click(on_buttona_click_callback)

u_gui.on_b_click(on_buttonb_click_callback)

if __name__ == '__main__':

while True:

#print("chengxu:",chengxu)

time.sleep(0.05)

if chengxu==1:

标题.config(text="行空板图片生成")

if bs==1:

text=yuyinshibie()

wrap_text1(text)

image_resized=wenshengtu(text)

while True:

show_pic(image_resized)

if chengxu==0:

break

elif chengxu==2:

标题.config(text="行空板视频生成")

if bs==1:

text=yuyinshibie()

wrap_text(text)

shipinshengcheng(text)

以下是运行结果:

视频有剪辑,其生成时间大约为5-6分钟左右,图片生成世界短一点,大约2分钟左右

五、行空板 M10 扩展板组合在项目中的关键作用

行空板 M10 扩展板组合在本项目中扮演了极为关键的角色,其各个组件的独特功能为项目的顺利实施提供了坚实支撑。

1.800mAh 电池扩展板堪称项目的“能量源泉”。在许多实际应用场景中,外部电源的接入往往受限,800mAh 电池扩展板凭借其适中的容量,能够为行空板提供长时间且稳定的电力支持,让项目摆脱了对固定电源插座的依赖。这使得项目可以在教室、实验室、活动现场甚至户外等不同场所灵活开展,极大地拓展了项目的应用范围,提升了项目的实用性。无论是在校园的科技展示活动中,让学生们在操场中央就能演示项目,还是在社区科普活动中,方便工作人员在各个小区灵活展示,800mAh 电池扩展板都以其可靠的移动电源功能,保障了项目随时随地都能稳定运行,为项目注入了强大的生命力。

2.金手指扩展板则为项目带来了强大的接口扩展功能。在项目实施过程中,常常需要连接各种外设来丰富项目功能,如传感器、显示屏、通信模块等。金手指扩展板提供了丰富的接口资源,这些接口能够满足多种外设的连接需求。这种强大的连接能力,使得项目能够根据实际需求灵活扩展功能,将各种外设整合到项目系统中,打造出功能更强大、应用更广泛的智能项目,为项目的创新和拓展提供了无限可能。

六、感想感言

通过学习大佬的作品,让我们小白成长了许多。活动不仅带给我们紧张和刺激,而且让我们学习到了知识,这里感谢组织活动的主办方,感谢默默支持的技术顾问,同时感谢各位大佬,让我体会到了行空板的精彩。

返回首页

返回首页

回到顶部

回到顶部

评论